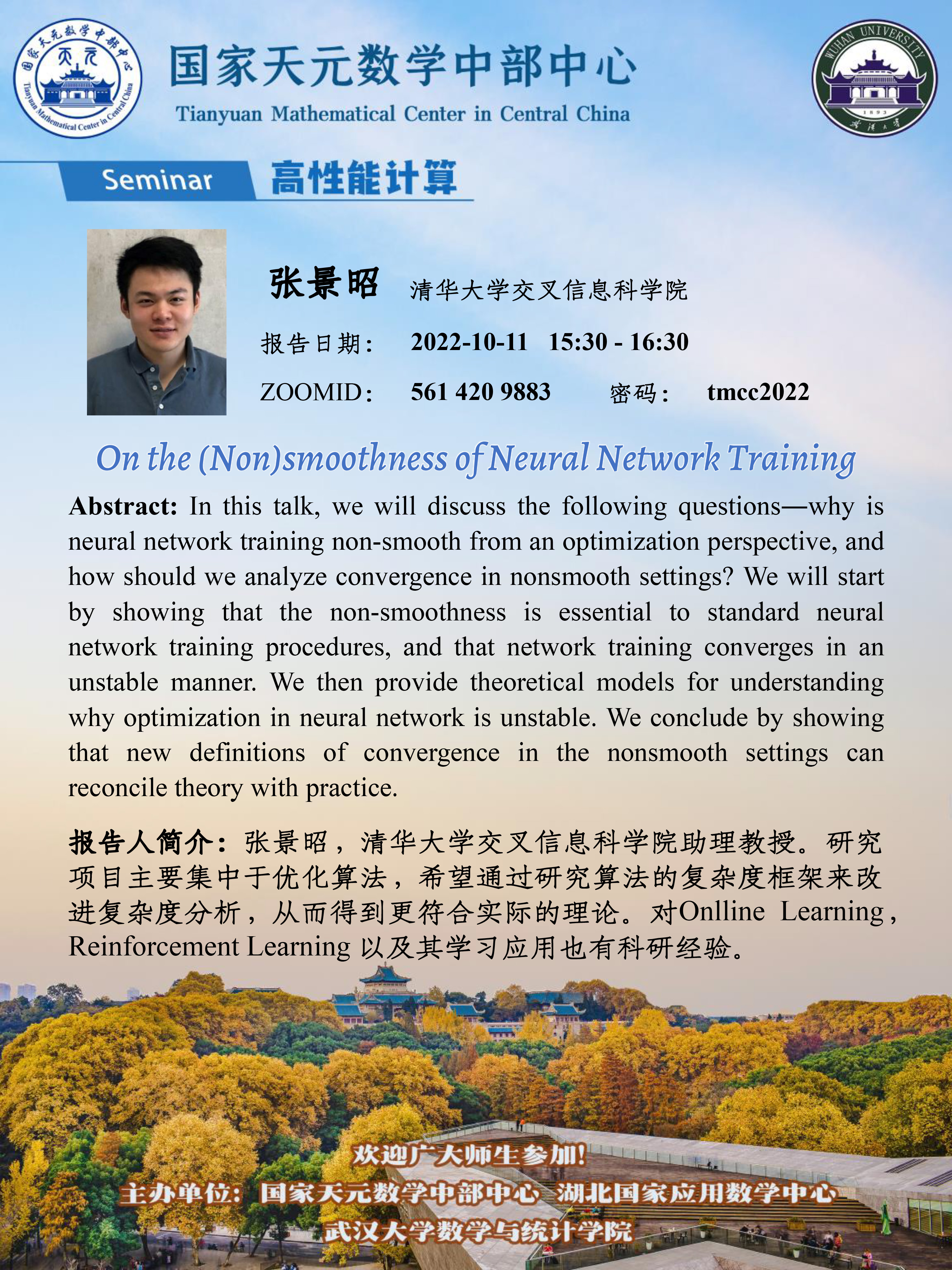

Title: On the (Non)smoothness of Neural Network Training

Time: 2022-10-11 15:30 - 16:30

Speaker: Jingzhao Zhang (张景昭), Institute for Interdisciplinary Information Sciences, Tsinghua University

Zoom ID: 561 420 9883 Password: tmcc2022

Abstract: In this talk, we discuss two questions: why is neural network training nonsmooth from an optimization perspective, and how should we analyze convergence in nonsmooth settings? We start by showing that nonsmoothness is essential to standard neural network training procedures, and that network training converges in an unstable manner. We then provide theoretical models for understanding why neural network optimization is unstable. We conclude by showing that new definitions of convergence in nonsmooth settings can reconcile theory with practice.